Local AI, Easier Than Ever: Ollama Lands on Windows

The arrival of Ollama’s new app on Windows marks a turning point for local artificial intelligence. The app makes customizable large language models (LLMs) accessible to everyone, from newcomers to AI enthusiasts, without depending on the cloud. Because the models run on your own machine, your data stays private and you keep full control over your AI interactions.

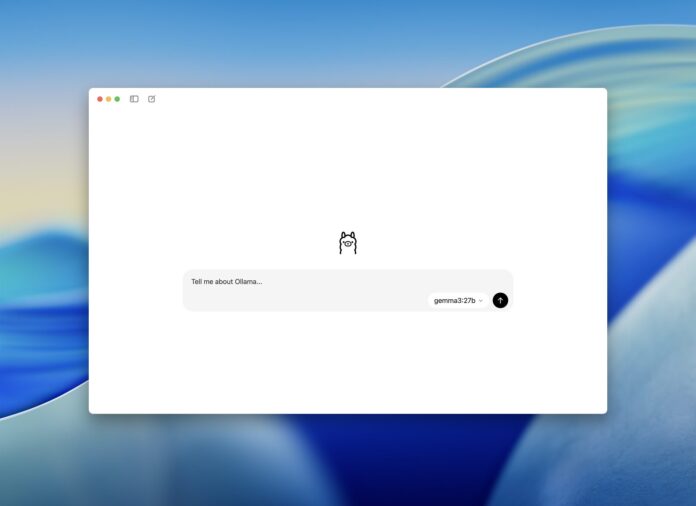

Designed with simplicity in mind, the app makes it easy to bring powerful AI into your daily workflow, and its minimal, intuitive interface is easy to navigate. As the Ollama blog announcement illustrates, this launch is more than an upgrade: it represents a shift in how AI can be deployed directly on your device.

What is Ollama?

Ollama is a robust open-source platform that lets users run powerful large language models (LLMs) directly on their own devices. It began as a command-line tool on macOS and Linux and later added Windows support; the new desktop app now brings a graphical interface to Windows. This matters because it puts local AI within easy reach of users of the world’s most popular desktop operating system.

Beyond its technical capabilities, Ollama’s design emphasizes ease of use and security. Developers, researchers, and casual users alike can now run local AI without wrestling with complicated setups, and the platform is flexible enough for use cases ranging from document summarization to code analysis.

Simplified LLM Experience: New Features at a Glance

The app simplifies managing and interacting with LLMs. Users can browse, download, and switch between models with a few clicks, with no command-line expertise required, which helps demystify AI technology for newcomers.
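The app exposes model management through its interface, but the same information is also available from the local Ollama server’s REST API. The sketch below assumes the server is listening on its default address, `http://localhost:11434`, and reads the `/api/tags` endpoint, which lists the models already downloaded. The function names are illustrative helpers, not part of Ollama itself.

```python
import json
import urllib.request

# Assumption: the Ollama server is running on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_response: dict) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local server which models have already been downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return parse_model_names(json.load(resp))
```

On a machine with models installed, `list_local_models()` returns their tags (for example `gemma3:latest`); what you actually see depends on which models you have pulled. Keeping the parsing in its own function makes it easy to test without a running server.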

The app also supports several more advanced features. Users can drag and drop files such as text documents or PDFs into a chat to summarize them or ask questions about their contents. Multimodal models such as Google’s Gemma 3 can analyze images alongside text, which broadens the range of tasks the app can handle. Whether you are working with complex datasets or simply experimenting with creative projects, these features cover a wide range of needs.
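Drag-and-drop in the app is the easiest route, but the same multimodal capability can also be reached from a script. A minimal sketch, assuming the default local server address and a multimodal model already pulled under the placeholder tag `gemma3`: Ollama’s `/api/chat` endpoint accepts base64-encoded images in a message’s `images` list. The helper names `build_image_chat` and `ask_about_image` are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: the Ollama server is running on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_image_chat(model: str, question: str, image_bytes: bytes) -> dict:
    """Build an /api/chat request body pairing a text question with one image.

    Images are sent as base64-encoded strings in the message's "images" list;
    only multimodal models can make use of them.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {"role": "user", "content": question, "images": [encoded]},
        ],
        "stream": False,  # ask for one JSON reply instead of a token stream
    }


def ask_about_image(model: str, question: str, path: str,
                    base_url: str = OLLAMA_URL) -> str:
    """Send the image at `path` with a question and return the model's answer."""
    with open(path, "rb") as f:
        payload = build_image_chat(model, question, f.read())
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        # A non-streaming chat reply carries the answer in message.content.
        return json.load(resp)["message"]["content"]
```

For example, `ask_about_image("gemma3", "What does this chart show?", "chart.png")` would return the model’s answer as a string; the model tag and file name are placeholders.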

Why Choose Local AI on Windows?

Running LLMs locally with Ollama on Windows offers distinct advantages over cloud-based services. Because your prompts and sensitive data never leave your device, you gain privacy and security. This matters especially for enterprises handling confidential information and for individuals who value their digital privacy.

Local operation also removes your dependence on a remote service: there are no server queues or provider outages, so the AI is available whenever your machine is. Running models locally also avoids monthly cloud subscription fees, which can reduce costs. With a modern dedicated GPU, local models can respond quickly, as highlighted in the CyberNews review, although actual speed depends on the model size and your hardware.

Getting Started with Ollama on Windows

Getting started with Ollama on Windows is straightforward: download the installer, launch the app, and choose from a growing selection of open-source models, including Google’s Gemma, DeepSeek, and Alibaba’s Qwen. This makes it simple to bring state-of-the-art AI into everyday projects.
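After installation, one quick way to confirm that the background server is up is to query its version endpoint. A small sketch, assuming the default address `http://localhost:11434`; `server_version` is a hypothetical helper that returns `None` when nothing answers, for example because the app has not been started yet.

```python
import json
import urllib.request
from typing import Optional


def server_version(base_url: str = "http://localhost:11434",
                   timeout: float = 2.0) -> Optional[str]:
    """Return the local Ollama server's version string, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version",
                                    timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (OSError, ValueError):
        # OSError covers URLError, refused connections and timeouts;
        # ValueError covers a reply that is not valid JSON.
        return None
```

If `server_version()` returns a version string, the server is running and ready for requests; `None` usually means the app needs to be launched.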

Behind its plug-and-play setup, Ollama runs a local server that both the graphical interface and other applications can use. An Ollama icon in the Windows system tray keeps the app one click away. Because the server also works with tools such as Open WebUI, more advanced users can build it into larger workflows, so novices and experts alike benefit from the platform’s flexibility.
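Because the local server speaks plain HTTP, any script can send it prompts, in the same way integrations such as Open WebUI talk to it. A minimal sketch, assuming the default address and a model already downloaded under a placeholder tag such as `qwen3`, that calls the `/api/generate` endpoint with streaming turned off so the reply arrives as a single JSON object. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: the Ollama server is running on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Prepare a non-streaming POST request for the /api/generate endpoint."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(request) as resp:
        # With "stream": False the server replies with one JSON object whose
        # "response" field holds the full completion.
        return json.load(resp)["response"]
```

Calling `generate("qwen3", "Summarize the benefits of running LLMs locally in two sentences.")` returns the generated text. Building the request separately from sending it keeps the payload easy to inspect and test.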

Key Use Cases for Ollama on Windows

Ollama on Windows serves a variety of needs across different sectors. For research and document analysis, users can drag long PDFs or datasets into the app to produce summaries, extract key insights, or generate Q&A reports. This speeds up working through large volumes of material.

Software developers can submit code files for automated explanation and documentation. The multimodal features support creative work such as design reviews and combined image-text tasks. And users who want private AI chat get real-time conversations that never leave the local device, as noted in Mitja Martini’s review.

Performance and Model Selection

Ollama lets you choose a context length that fits your task, and some models support up to 128k tokens, comparable to the context windows of many cloud-hosted models. This makes it possible to work with long, complex documents, although larger context windows require more memory and can slow responses.
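In Ollama’s API the context window can be set per request through the `num_ctx` option. The sketch below picks a value for a given document using a rough heuristic of about four characters per token for English text (an assumption, not a real tokenizer count) and caps it at 131,072 tokens, assuming the chosen model supports 128k; check the model’s documented limit before relying on it. `pick_num_ctx` and `build_document_request` are hypothetical helpers.

```python
# Assumption: 128k (131,072 tokens) is the largest context the chosen model
# supports; check the model's page in the Ollama library for its real limit.
MODEL_MAX_CTX = 131072


def pick_num_ctx(text: str, reserve: int = 1024,
                 floor: int = 4096, model_max: int = MODEL_MAX_CTX) -> int:
    """Choose a context window large enough for `text` plus room for an answer.

    Estimates tokens as ~4 characters each, then doubles from `floor`
    until the estimate fits or `model_max` is reached.
    """
    needed = len(text) // 4 + reserve
    ctx = floor
    while ctx < needed and ctx < model_max:
        ctx *= 2
    return min(ctx, model_max)


def build_document_request(model: str, document: str, question: str) -> dict:
    """Build an /api/generate body whose num_ctx is sized to the document."""
    return {
        "model": model,
        "prompt": f"{document}\n\nQuestion: {question}",
        "stream": False,
        # num_ctx overrides the model's default context length for this call.
        "options": {"num_ctx": pick_num_ctx(document)},
    }
```

Doubling from a modest floor keeps small requests cheap, since memory use grows with the context window, while still scaling up for long documents.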

The platform supports a wide variety of LLMs, including DeepSeek, Gemma, and Qwen, so whatever the application, there is likely a model that meets your requirements. Its popularity, with downloads reported in the hundreds of millions worldwide, reflects a large and active user community.

Comparing Ollama to Other Local AI Tools

While other tools such as LM Studio also run LLMs locally, Ollama distinguishes itself through its focus on ease of use and its broad feature set. Its user-friendly design and smooth integration with existing tools make it an attractive option for casual users and professionals alike, and its local data handling keeps privacy front and center.

Ollama also evolves quickly, with regular updates that incorporate the latest advances in AI. Some proprietary tools may offer niche features such as real-time visual previews, but Ollama’s combination of multimodal support and user privacy provides a balanced, high-quality experience. As highlighted on the NVIDIA blog, pairing NVIDIA RTX GPUs with local LLMs points to a promising future for desktop AI.

Final Thoughts

Ollama’s new Windows app expands what local AI can do by pairing powerful models with a user-friendly interface. Because all data stays on your machine, you can confidently work with sensitive documents, experiment with cutting-edge LLMs, or simply enjoy private AI chat without depending on the cloud. This approach is a real step toward democratizing access to advanced AI technology.

With continuous updates and a growing ecosystem of models and tools, Ollama is well positioned to meet evolving user needs. Whether you are a developer, researcher, or casual user, it offers meaningful gains in productivity and security. With its blend of performance, privacy, and ease of use, Ollama is helping to shape the next chapter of local AI.