Quantum computing has long promised a leap in computational power that could transform fields from drug discovery to materials science. Today, industry giants such as IBM and Google are more confident than ever that scalable quantum computers (machines capable of fault-tolerant, genuinely useful computation) could become a reality within this decade. This progress builds on years of experimental breakthroughs and marks a turning point where quantum research meets practical application, with potential benefits across a wide range of industries.

Because qubits can exist in superpositions of states, quantum systems open up possibilities that are largely out of reach for conventional computers. As researchers overcome error-prone operations and environmental disturbances, the field is moving quickly. Both IBM and Google are also investing heavily in research, collaboration, and infrastructure, with the aim of integrating quantum computers with existing technologies.

What Does “Scalable Quantum Computing” Really Mean?

Traditional computers rely on bits, each representing either a zero or a one. Quantum computers use qubits, which can be placed in a superposition of zero and one. Describing the joint state of n qubits takes 2^n amplitudes, so the state space grows exponentially with the number of qubits. For certain types of problems, this lets quantum algorithms run much faster than the best known classical methods. Cryptography, optimization, and simulation are the areas most often cited as likely to benefit.
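To make the exponential scaling described above concrete, the following minimal sketch estimates how much classical memory it would take to store the full state of n qubits. It assumes one 16-byte complex number per amplitude; the function name `statevector_bytes` is hypothetical and chosen only for illustration.

```python
# Illustrative only: classical memory needed to hold a full n-qubit state vector.
# Assumption: each amplitude is stored as a 16-byte complex number (complex128).

BYTES_PER_AMPLITUDE = 16


def statevector_bytes(n_qubits: int) -> int:
    """Bytes needed to hold all 2**n complex amplitudes of an n-qubit state."""
    amplitudes = 2 ** n_qubits
    return amplitudes * BYTES_PER_AMPLITUDE


for n in (10, 30, 50):
    print(f"{n} qubits -> {statevector_bytes(n):,} bytes")
```

Under that assumption, 10 qubits need about 16 KiB, 30 qubits about 16 GiB, and 50 qubits about 16 PiB, which is why brute-force classical simulation stops being practical after a few dozen qubits.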

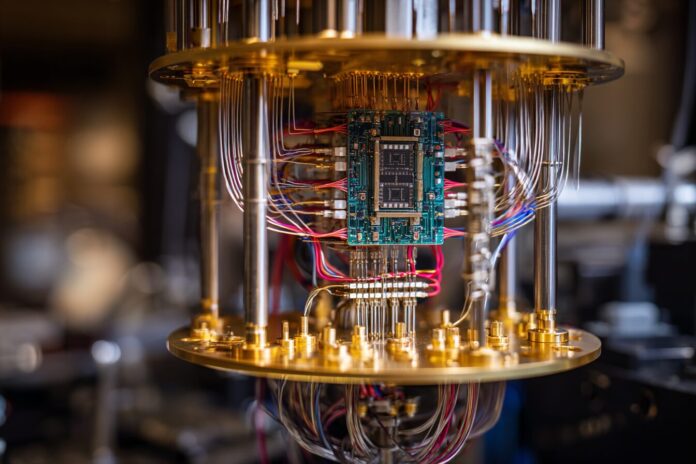

These capabilities come with significant technical challenges. Qubits are fragile, and protecting them against noise and operational errors is an immense hurdle. Scaling up quantum systems therefore depends on advanced error correction protocols and on hardware that shields qubits from environmental disturbance. As highlighted in recent discussions on IBM’s roadmap and Scott Aaronson’s analytical insights, every incremental improvement brings robust, scalable quantum architectures closer.

IBM’s Roadmap Toward Fault-Tolerant Quantum Computing

Since 2020, IBM has shared its quantum computing roadmap openly, with clear milestones along the way. Because transparency is critical in a rapidly evolving field, IBM’s public benchmarks have helped demystify the move from experimental setups to reliable, fault-tolerant systems. IBM is targeting 2029 for Starling, a system expected to execute 100 million quantum gates on 200 logical qubits. Compared with the 5,000-gate performance of its Nighthawk hardware in 2025, that is a 20,000-fold increase in circuit size and underscores the company’s ambitious objectives.[1]
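A rough calculation shows why a 100-million-gate circuit demands error correction. The sketch below assumes every gate fails independently with the same probability, so a circuit of N gates succeeds with probability (1 - p)^N. That error model, the 50% success target, and the function names are illustrative assumptions, not IBM figures, and the sketch ignores the distinction between physical and logical gates.

```python
import math


def success_probability(gate_error: float, n_gates: int) -> float:
    """Chance that no gate fails, assuming independent, identical gate errors."""
    # log1p keeps precision when gate_error is very small.
    return math.exp(n_gates * math.log1p(-gate_error))


def max_gate_error(n_gates: int, target_success: float = 0.5) -> float:
    """Largest per-gate error that still meets the target success probability."""
    # Solve (1 - p) ** n_gates = target_success for p.
    return -math.expm1(math.log(target_success) / n_gates)


for n_gates in (5_000, 100_000_000):
    p = max_gate_error(n_gates)
    print(f"{n_gates:>11,} gates -> per-gate error below about {p:.2e}")
```

Under these assumptions, a 5,000-gate circuit tolerates a per-gate error on the order of 1e-4, while a 100-million-gate circuit needs roughly 7e-9. Error rates that low are generally expected to require logical qubits protected by error correction rather than bare physical qubits.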

IBM’s focus on better error correction algorithms, combined with modular hardware architectures, is intended to counter qubit instability. Improvements in real-time error correction, such as decoders for bivariate bicycle codes implemented on FPGAs or ASICs, are setting the stage for more stable and scalable quantum systems. As explained in greater detail on IBM’s Quantum Roadmap, these innovations are part of a systematic strategy to make quantum computing commercially viable.[3]

Google’s Willow Chip: A Leap Toward Logical Qubits

Google’s Willow quantum processor marks a noteworthy advance on the error problem. The processor, which has 105 physical qubits, demonstrated exponential error suppression using surface code error correction. Google scaled the encoded qubit array from 3×3 to 5×5 to 7×7, roughly halving the logical error rate at each step.[4]

Willow’s result sets a benchmark for operating “below threshold,” the regime where adding more qubits improves error resilience rather than compounding faults. Staying below threshold is a prerequisite for practical fault-tolerant machines, so the result supports the viability of scalable quantum architectures and may shorten the timeline for future systems. As Scott Aaronson explains in his blog, these developments provide strong evidence that scalable, commercially usable quantum hardware is nearer than many previously believed.[2]
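The difference between operating below and above threshold can be sketched with a commonly used scaling model, in which each step in code distance (3 to 5 to 7) divides the logical error rate by a suppression factor, often written Λ. The halving reported for Willow corresponds to Λ of about 2. The qubit count of 2d² - 1 per patch is the standard figure for a rotated surface code and is an assumption here, not something stated in the sources above. The starting error rate is a placeholder, not a measured value.

```python
def surface_code_qubits(distance: int) -> int:
    """Physical qubits in one rotated surface-code patch: d*d data + d*d - 1 measure."""
    return 2 * distance * distance - 1


def logical_error(distance: int, base_error: float, suppression: float) -> float:
    """Logical error at an odd `distance`, relative to a distance-3 baseline.

    Each step d -> d + 2 divides the error by `suppression` (Lambda).
    """
    steps = (distance - 3) // 2
    return base_error / suppression ** steps


BASE_ERROR = 1e-2  # placeholder distance-3 error rate, not a measured value

for label, lam in (("below threshold", 2.0), ("above threshold", 0.8)):
    print(f"{label} (Lambda = {lam})")
    for d in (3, 5, 7):
        qubits = surface_code_qubits(d)
        error = logical_error(d, BASE_ERROR, lam)
        print(f"  d={d}: {qubits} physical qubits, logical error ~ {error:.4f}")
```

With Λ = 2, the logical error halves at each step while the patch grows from 17 to 49 to 97 physical qubits, which fits within Willow’s 105. With Λ below 1, the same growth in qubit count makes the logical error worse, which is the situation error-corrected hardware was in before crossing the threshold.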

Why Now? From the Utility Era to Fault Tolerance

Quantum computing is experiencing a pivotal transition from the noisy intermediate-scale quantum (NISQ) era to what experts are calling the “utility era”. Because early systems struggled to outperform classical computers, it was difficult to justify significant investments in quantum hardware. However, as demonstrated by both IBM and Google, advancements in hybrid quantum-classical algorithms, chip connectivity, and error correction have begun to tip the scales.

This shift is also driven by growing demand for computational power in real-world applications. Quantum processors are beginning to handle tasks that lie beyond classical simulation, and industries from logistics to pharmaceuticals are watching closely. The enthusiasm for scalable quantum technology is therefore well-founded, and the next few years will likely see an acceleration in both research and early commercial deployments. Additional insights on this evolving era can be found in comprehensive analyses and video updates on the subject, such as the recent YouTube briefing on quantum breakthroughs.[Video]

Potential Impacts Across Industries

The arrival of scalable quantum computers is poised to disrupt multiple industries. In pharmaceuticals, quantum simulations could reveal complex molecular interactions, supporting the discovery of new drugs and advanced materials. For certain problems, these machines could run calculations that would take traditional supercomputers millennia, which opens the door to breakthroughs in areas such as personalized medicine and predictive analytics.

In finance and logistics, quantum-enhanced algorithms may streamline operations by optimizing supply chains and investment portfolios. They could also lead to novel financial models and more efficient risk assessments, reshaping entire sectors. Major corporations are already investing in quantum technologies for these reasons, as detailed in analyses like those on Biforesight’s quantum computing coverage.[4]

Remaining Barriers and Looking Ahead

Despite these breakthroughs, several challenges remain on the road to fully scalable quantum computing. High-fidelity two-qubit gates and the management of large arrays of logical qubits persist as major obstacles, so researchers continue to focus on new hardware solutions. Integrating error correction across the whole system architecture is critical to reliable, fault-tolerant operation at scale.

Research breakthroughs are now arriving within months rather than decades, and the quantum computing landscape is evolving much faster than many anticipated. Each new advance, whether through IBM’s modular frameworks or Google’s Willow chip, brings us closer to a future where quantum processors are indispensable in both scientific discovery and everyday industry applications. This momentum underscores the urgency and potential of quantum technology as it approaches a transformative era.[2]

Further Reading and References

For those interested in the details of these developments, further reading is available from several authoritative sources. IBM’s detailed discussion of fault tolerance can be found on its blog, and a comprehensive outline of its future plans is presented on the IBM Quantum Roadmap. In addition, Scott Aaronson’s blog post offers an insightful analysis of the Google Willow processor’s success and its implications for the quantum future.

The evolving relationship between theoretical progress and practical applications is also well documented in recent industry reviews, such as those on Biforesight. As the utility era gets under way, these sources provide both context and depth for enthusiasts and professionals alike.