Revolutionizing Edge AI: Meet Nemotron Nano 2 9B

Edge AI is evolving rapidly, and Nemotron Nano 2 9B is a notable step in that evolution. Announced by NVIDIA in August 2025, the model uses a hybrid architecture designed to improve both reasoning accuracy and computational efficiency. Its release shows how architectural choices can reshape real-world deployments.

AI applications increasingly need fast responses at low cost, and Nemotron Nano 2 9B targets exactly that combination. Industries ranging from customer service to analytics stand to gain operational efficiency from it. With its scalable design and adjustable reasoning effort, the model is also a strong fit for the next generation of edge computing solutions.

What Sets Nemotron Nano 2 9B Apart?

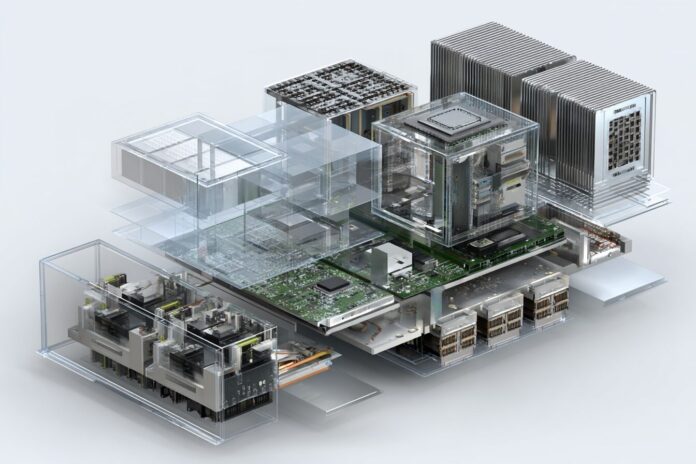

The model combines Mamba-2 and Transformer elements in a single hybrid design. Most of the self-attention layers found in a conventional Transformer are replaced with Mamba-2 layers, which lets the model reach up to 6x higher throughput than similarly sized open models while preserving accuracy. The design speeds up token generation and is well suited to workloads with long reasoning traces.
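One commonly cited reason a hybrid design can raise throughput is memory: an attention layer keeps a key-value cache that grows with sequence length, while a Mamba-2 layer keeps a fixed-size state. The sketch below estimates cache size for two hypothetical layer layouts. The layer counts, head counts, and head dimension are illustrative assumptions, not the published Nemotron Nano 2 configuration.

```python
GIB = 2**30  # bytes per GiB


def kv_cache_bytes(attention_layers: int, seq_len: int,
                   kv_heads: int = 8, head_dim: int = 128,
                   bytes_per_value: int = 2) -> int:
    """Bytes of key-value cache needed for one sequence.

    Each attention layer stores one key and one value vector per KV head
    for every token. All default dimensions here are hypothetical.
    """
    per_token_per_layer = 2 * kv_heads * head_dim * bytes_per_value  # K and V
    return attention_layers * seq_len * per_token_per_layer


SEQ_LEN = 128 * 1024  # a 128k-token context

# Hypothetical layouts: every layer uses attention vs. only a few layers do.
full_attention = kv_cache_bytes(attention_layers=32, seq_len=SEQ_LEN)
hybrid = kv_cache_bytes(attention_layers=4, seq_len=SEQ_LEN)

print(f"all-attention: {full_attention / GIB:.1f} GiB")
print(f"hybrid:        {hybrid / GIB:.1f} GiB")
```

The hybrid figure leaves out the Mamba-2 state, which does not grow with sequence length. A smaller cache leaves room for larger batches and reduces memory traffic during generation, which is where throughput gains tend to appear.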

As edge AI requirements keep growing, Nemotron Nano 2 9B stands out by addressing scalability and cost directly. The NVIDIA Technical Report and the material published on Hugging Face describe both the immediate performance improvements and the longer-term operational benefits.

Hybrid Architecture Highlights

- Model Size: 9B parameters

- Architecture: Hybrid Mamba2-Transformer

- Throughput: Up to 6x higher than other open models in its class

- Cost Efficiency: Novel “Thinking Budget” cuts reasoning inference costs by as much as 60%

- Language Support: English, coding languages, and major global languages like German, French, Italian, Spanish, and Japanese

- Optimized For: Customer service agents, analytics copilots, support chatbots, retrieval-augmented generation (RAG), and RTX/edge deployment

Innovative Thinking Budget: Control Cost and Performance

The “Thinking Budget” gives developers direct control over inference cost. Reasoning models can generate long chains of intermediate tokens before answering, and every one of those tokens costs compute and latency. The Thinking Budget lets users cap the number of tokens the model spends on reasoning, which fits naturally with other cost-saving strategies at the edge.

The feature also makes costs more predictable. Businesses can tune the balance between cost, latency, and accuracy, and can adjust the budget as workload demands change, which makes it valuable for dynamic and scalable deployments.
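The idea of a token cap on reasoning can be sketched in a few lines. This is a minimal illustration, not NVIDIA's implementation: the `</think>` end marker, the function names, and the token counts are all assumptions chosen for the example.

```python
from typing import Iterable, List

END_OF_REASONING = "</think>"  # assumed marker that closes the reasoning trace


def apply_thinking_budget(reasoning_tokens: Iterable[str], budget: int) -> List[str]:
    """Keep at most `budget` reasoning tokens, then close the trace."""
    if budget < 0:
        raise ValueError("budget must be non-negative")
    kept: List[str] = []
    for token in reasoning_tokens:
        # Stop when the model ends its reasoning or the budget runs out.
        if token == END_OF_REASONING or len(kept) >= budget:
            break
        kept.append(token)
    kept.append(END_OF_REASONING)  # always end with the closing marker
    return kept


def reasoning_tokens_saved(unbounded_tokens: int, budget: int) -> float:
    """Fraction of reasoning tokens avoided relative to an uncapped trace."""
    if unbounded_tokens <= 0:
        raise ValueError("unbounded_tokens must be positive")
    used = min(unbounded_tokens, budget)
    return (unbounded_tokens - used) / unbounded_tokens


trace = ["step"] * 1000  # stand-in for a 1,000-token reasoning trace
capped = apply_thinking_budget(trace, budget=400)
saved = reasoning_tokens_saved(unbounded_tokens=1000, budget=400)
print(len(capped) - 1, "reasoning tokens kept")
print(f"{saved:.0%} of reasoning tokens avoided")
```

With these illustrative numbers, capping a 1,000-token trace at 400 tokens avoids 60% of the reasoning tokens. Actual savings depend on how long the model would otherwise reason and on how much accuracy the application can trade away.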

Technical Advancements: Efficient Training and Deployment

Nemotron Nano 2 9B began as a 12-billion-parameter base model pre-trained on 20 trillion tokens using FP8 precision. NVIDIA then compressed and distilled that model into a 9B-parameter version using the Minitron approach. The strategy emphasizes efficiency in both training and inference.

The final model is engineered to run on a single NVIDIA A10G GPU while supporting context lengths of up to 128k tokens at bfloat16 precision. This lowers energy usage and hardware costs without sacrificing performance. Ongoing updates, shared through sources like the NVIDIA Developer Blog, are intended to keep the model current.
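A quick back-of-the-envelope calculation shows why the parameter count matters for a single-GPU target. The sketch assumes 2 bytes per parameter for bfloat16 and a nominal 24 GB of memory on the A10G; usable memory is somewhat lower in practice, and the calculation ignores activations and cache.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed for model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


A10G_MEMORY_GB = 24.0  # nominal figure, assumed for this estimate

for name, params in [("12B base", 12e9), ("9B compressed", 9e9)]:
    weights = weight_memory_gb(params)
    headroom = A10G_MEMORY_GB - weights
    print(f"{name}: {weights:.0f} GB weights, {headroom:.0f} GB headroom")
```

Under these assumptions, the 12B base model's weights alone would fill the card, while the 9B version leaves roughly 6 GB for the cache and activations needed to serve long contexts.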

Performance: Benchmark Results and Edge Readiness

Benchmark results show that Nemotron Nano 2 9B matches or exceeds the accuracy of other leading open models of similar size, such as Qwen3-8B. It performs well on tasks ranging from mathematics and science to complex code reasoning, which makes it effective where both precision and speed matter. Its higher throughput translates directly into better performance in edge environments where rapid inference is essential.

The model also handles long input and output traces well under real-world conditions. Deployed on edge servers or integrated into customer service systems, Nemotron Nano 2 9B can increase efficiency while reducing operational costs. These results make it a strong candidate for scalable AI applications across industries.

Global Availability and Open-Source Commitment

NVIDIA has taken an open-source approach with the release of Nemotron Nano 2 9B. The model weights are available on Hugging Face, with endpoints on NVIDIA’s own platform to follow, giving developers direct access to the model. Detailed technical documentation helps users integrate, adapt, and build on the model design.

Datasets and post-training resources are also available to the AI community, so researchers and developers worldwide can experiment with, validate, and improve edge AI solutions. This openness accelerates innovation and fosters a collaborative ecosystem, as highlighted by updates on the NVIDIA Developer Blog.

Developer Use Cases and Next Steps

Nemotron Nano 2 9B is designed for a wide range of practical applications. Its multilingual support and strong reasoning performance make it well suited to interactive AI agents and analytics copilots. It also delivers the speed needed for real-time, on-device decision support.

Edge customer service chatbots, analytics systems, and even robotics applications can benefit from the model. Developers are encouraged to experiment with it directly on the NVIDIA and Hugging Face platforms. With its focus on energy efficiency and speed, the model is positioned to meet the growing demand for rapid, cost-effective AI at the edge.
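For readers who want to try the model from Hugging Face, a starting point might look like the sketch below. It uses the standard Hugging Face Transformers loading and chat-template calls, but several details are assumptions to verify against the model card: the repository id, the need for `trust_remote_code`, and the `/think` and `/no_think` system prompts used to toggle reasoning. The helper names are hypothetical.

```python
MODEL_ID = "nvidia/NVIDIA-Nemotron-Nano-9B-v2"  # assumed repository id; verify on Hugging Face


def build_messages(prompt: str, reasoning: bool = True) -> list[dict]:
    """Build a chat message list for the model.

    The "/think" and "/no_think" system prompts are an assumed convention
    for toggling reasoning; confirm the exact control on the model card.
    """
    control = "/think" if reasoning else "/no_think"
    return [
        {"role": "system", "content": control},
        {"role": "user", "content": prompt},
    ]


def generate(prompt: str, max_new_tokens: int = 256) -> str:
    """Load the model and generate a reply (downloads weights on first call)."""
    # Imported lazily so build_messages works without these packages installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.bfloat16,
        trust_remote_code=True,  # assumed: the hybrid model may ship custom code
    ).to("cuda")

    inputs = tokenizer.apply_chat_template(
        build_messages(prompt),
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate("Summarize this support ticket: ...")` requires a CUDA GPU with enough memory for the bfloat16 weights. For production serving, an inference server is likely a better fit than this direct loading path.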

Why Nemotron Nano 2 9B Redefines Edge AI

Enterprises are under increasing pressure to deploy AI models that respond quickly, answer accurately, and consume little energy. Nemotron Nano 2 9B addresses all three with its combination of high throughput, cost efficiency, and adjustable reasoning effort. It also shows how recent architecture research can be carried into real-world applications.

The model’s design and open availability make it a useful reference point for both large-scale inference and dynamic, cost-sensitive applications. Organizations looking to strengthen their AI capabilities can use it to improve customer experiences, data analytics, and operational outcomes. As industries continue to adopt smarter AI solutions, Nemotron Nano 2 9B stands as a benchmark for future innovation in edge computing.

References

- NVIDIA Nemotron Nano 2 Technical Report

- Hugging Face: Supercharge Edge AI With High-Accuracy Reasoning Using NVIDIA Nemotron Nano 2 9B

- NVIDIA Nemotron Nano 9B V2 – Hugging Face Repository

- Gigazine: NVIDIA releases Nemotron Nano 2